Reducing Coalescing Operations

In "Coalescing NSOperations", I wrote about coalescing NSOperations; however, during the writing of that post, I began to explore other ways that I could coalesce operations. One solution I discovered has all the benefits of the previous coalescing approach but significantly reduces the amount of effort required by the developer to support coalescing. This new approach is the topic of this post.

Take 2 📽

In "Networking with NSOperation as your wingman", I wrote about combining NSURLSession with NSOperation to wrap networking requests and the processing of that request into one single task.

One advantage of this combination was that it became possible to prevent duplicate network requests to the same resource from happening at the same time by coalescing (combining) the duplicate operations. By preventing these unneeded requests, we save the user bandwidth and also make the app feel faster 🏎️. In this article, I look at how to coalesce operations.

In the below example, you won't see any networking code, as I will focus purely on coalescing. Still, it should be possible to see how combining this example with the example in "Networking with NSOperation as your wingman" can lead to a really performant networking stack.

Making introductions

'To coalesce' is defined by the Oxford Dictionary as to:

combine (elements) in a mass or whole

When we coalesce our NSOperation subclasses, the objective is to execute the task that the NSOperation instance encompasses only once (within the time it takes for that operation to get to the front of the queue and be executed), regardless of how many times the same task was queued. Each request to queue the task should still be honoured: the coalesced operation triggers multiple callbacks, one for each queuer.

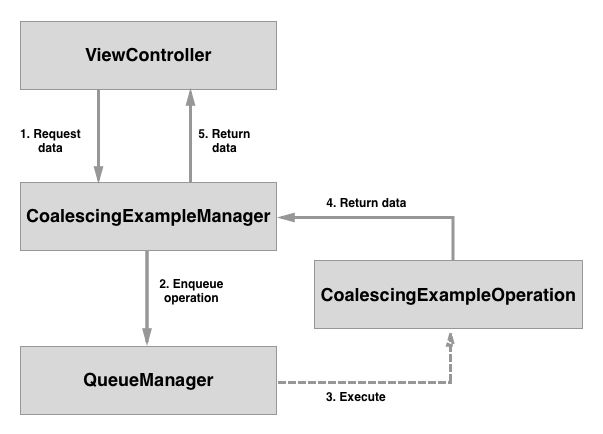

Let's begin to explore how we can do that by looking briefly at the classes we will later explore in more depth:

- QueueManager
  - Responsible for queuing or triggering the coalescing of operations and determining if an operation already exists on the queue. Ensures that only unique operations are added to the queue(s).
- CoalescingExampleManager
  - Responsible for abstracting away the operation creation and passing that operation to the QueueManager.
- CoalescingExampleOperation
  - A subclass of CoalescibleOperation which takes the callback closures of the operation and actually does the coalescing.

Diagrams are always helpful so let's look at how the classes are connected.

Getting to know each other more

Now that the introductions have been made, let's explore what we have:

    class CoalescingExampleManager: NSObject {

        // MARK: - Add

        class func addExampleCoalescingOperation(queueManager: QueueManager = QueueManager.sharedInstance, completion: (CoalescibleOperation.CompletionClosure)?) {
            let operation = CoalescingExampleOperation()
            operation.completion = completion

            queueManager.enqueue(operation)
        }
    }

If you've read the previous post, you will notice that this class is significantly smaller than the earlier version.

Let's break this method down into smaller code snippets and explore each in turn.

    class func addExampleCoalescingOperation(queueManager: QueueManager = QueueManager.sharedInstance, completion: (CoalescibleOperation.CompletionClosure)?) {

Above is the method's signature, which accepts two parameters: queueManager and completion. queueManager is the object that the soon-to-be-created operation will be enqueued on. completion is a closure that will be called when the operation has finished executing (it's also the way we will coalesce multiple calls together). The CompletionClosure signature is declared in the CoalescibleOperation base/parent class - we will see this class shortly.

The method could accept any closure, but the more generic the closure, the less code we need to write in our coalescing method, as we can pass the generic closures to our parent class - CoalescibleOperation.

    let operation = CoalescingExampleOperation()
    operation.completion = completion

    queueManager.enqueue(operation)

Nothing too special in the above code snippet, I only highlight it to draw attention (again) to how little code there is here compared to the previous version.

    class QueueManager: NSObject {

        // MARK: - Accessors

        lazy var queue: NSOperationQueue = {
            let queue = NSOperationQueue()

            return queue
        }()

        // MARK: - SharedInstance

        static let sharedInstance = QueueManager()

        // MARK: Addition

        func enqueue(operation: NSOperation) {
            if operation.isKindOfClass(CoalescibleOperation) {
                let coalescibleOperation = operation as! CoalescibleOperation

                if let existingCoalescibleOperation = existingCoalescibleOperationOnQueue(coalescibleOperation.identifier) {
                    existingCoalescibleOperation.coalesce(coalescibleOperation)
                } else {
                    queue.addOperation(coalescibleOperation)
                }
            } else {
                queue.addOperation(operation)
            }
        }

        // MARK: Existing

        func existingCoalescibleOperationOnQueue(identifier: String) -> CoalescibleOperation? {
            let operations = self.queue.operations

            let matchingOperations = (operations).filter({(operation) -> Bool in
                if operation.isKindOfClass(CoalescibleOperation) {
                    let coalescibleOperation = operation as! CoalescibleOperation

                    return identifier == coalescibleOperation.identifier
                }

                return false
            })

            return matchingOperations.first as? CoalescibleOperation
        }
    }

The QueueManager class is a singleton that holds all of our queues (there is only one in the above example, but it could be extended to contain as many as you need by adding more properties and coming up with a way to identify which queue to use - maybe a job for the CoalescingExampleManager 😉). So let's look through the more unique parts:

    func existingCoalescibleOperationOnQueue(identifier: String) -> CoalescibleOperation? {
        let operations = self.queue.operations

        let matchingOperations = (operations).filter({(operation) -> Bool in
            if operation.isKindOfClass(CoalescibleOperation) {
                let coalescibleOperation = operation as! CoalescibleOperation

                return identifier == coalescibleOperation.identifier
            }

            return false
        })

        return matchingOperations.first as? CoalescibleOperation
    }

The above method checks whether the queue already holds a CoalescibleOperation subclass instance whose identifier matches that of the operation we are thinking of adding to the queue. If a match is present, that operation is returned. As we can add both coalescible and non-coalescible operations to the same queue (some tasks are not suited to coalescing - we could just give those classes a unique identifier per instance, but that feels very hacky), we need a double filter - on the type of operation and then on the identifier.
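The double filter can also be expressed with a conditional cast, which folds the type check and the identifier check into one pass. Below is a minimal sketch of that idea, written in current Swift syntax rather than the older spelling used in the listings of this post. `Job`, `CoalescibleJob` and `firstMatch` are hypothetical stand-ins for NSOperation, CoalescibleOperation and existingCoalescibleOperationOnQueue, used so that the snippet runs without a real queue.

```swift
// Hypothetical stand-ins for NSOperation and CoalescibleOperation.
class Job {}

class CoalescibleJob: Job {
    let identifier: String

    init(identifier: String) {
        self.identifier = identifier
    }
}

// Returns the first coalescible job whose identifier matches, or nil.
// `as?` yields nil for plain jobs, so non-coalescible work is skipped
// without a separate type check or a forced cast.
func firstMatch(in jobs: [Job], identifier: String) -> CoalescibleJob? {
    for job in jobs {
        if let candidate = job as? CoalescibleJob, candidate.identifier == identifier {
            return candidate
        }
    }
    return nil
}

let queued: [Job] = [
    Job(),
    CoalescibleJob(identifier: "feed"),
    CoalescibleJob(identifier: "profile")
]

let match = firstMatch(in: queued, identifier: "profile")
print(match?.identifier ?? "no match")
```

The behaviour is the same as the filter-based version: plain jobs are ignored, and only a coalescible job with a matching identifier is returned.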

    func enqueue(operation: NSOperation) {
        if operation.isKindOfClass(CoalescibleOperation) {
            let coalescibleOperation = operation as! CoalescibleOperation

            if let existingCoalescibleOperation = existingCoalescibleOperationOnQueue(coalescibleOperation.identifier) {
                existingCoalescibleOperation.coalesce(coalescibleOperation)
            } else {
                queue.addOperation(coalescibleOperation)
            }
        } else {
            queue.addOperation(operation)
        }
    }

In the above method, we can add either plain NSOperation instances or our special CoalescibleOperation subclass instances. If it is a CoalescibleOperation instance, we check whether an operation with the same identifier already exists on the queue and, if not, add it to the queue. If one does exist, the new operation is coalesced with the existing operation - we will see what this mysterious coalesce method does below. What's interesting to note is that if we determine that the operation already exists on the queue, we actually discard the new operation once its completion closure has been coalesced; it is never added to the queue.
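To make the discard behaviour concrete, here is a small model of the enqueue decision in current Swift syntax. It is a sketch rather than the code from this post: `PendingJob` and `ModelQueue` are hypothetical names, and a plain array stands in for the NSOperationQueue so that the outcome can be inspected without executing any real work.

```swift
// Hypothetical model of a coalescible operation: an identifier plus a callback.
final class PendingJob {
    let identifier: String
    var completion: ((Bool) -> Void)?

    init(identifier: String, completion: ((Bool) -> Void)?) {
        self.identifier = identifier
        self.completion = completion
    }

    // Chain the newcomer's callback after our own.
    func coalesce(_ other: PendingJob) {
        let initial = completion
        let additional = other.completion
        completion = { successful in
            initial?(successful)
            additional?(successful)
        }
    }
}

// Hypothetical stand-in for QueueManager; an array plays the role of the queue.
final class ModelQueue {
    private(set) var jobs: [PendingJob] = []

    func enqueue(_ job: PendingJob) {
        if let existing = jobs.first(where: { $0.identifier == job.identifier }) {
            existing.coalesce(job) // the new job itself is never stored
        } else {
            jobs.append(job)
        }
    }
}

var callbacks: [String] = []
let modelQueue = ModelQueue()
modelQueue.enqueue(PendingJob(identifier: "feed", completion: { _ in callbacks.append("first") }))
modelQueue.enqueue(PendingJob(identifier: "feed", completion: { _ in callbacks.append("second") }))

// Simulate the single surviving job finishing its work.
modelQueue.jobs.first?.completion?(true)
print(modelQueue.jobs.count, callbacks)
```

Only one job remains in the model queue after both calls, yet both callers are notified when it completes.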

    class CoalescibleOperation: NSOperation {

        typealias CompletionClosure = (successful: Bool) -> Void

        // MARK: - Accessors

        //Should be set by subclass
        var identifier: String!

        var completion: (CompletionClosure)?

        private(set) var callBackQueue: NSOperationQueue

        // MARK: - Init

        override init() {
            self.callBackQueue = NSOperationQueue.currentQueue()!

            super.init()
        }

        // MARK: - AsynchronousSupport

        private var _executing: Bool = false
        override var executing: Bool {
            get {
                return _executing
            }
            set {
                if _executing != newValue {
                    willChangeValueForKey("isExecuting")
                    _executing = newValue
                    didChangeValueForKey("isExecuting")
                }
            }
        }

        private var _finished: Bool = false
        override var finished: Bool {
            get {
                return _finished
            }
            set {
                if _finished != newValue {
                    willChangeValueForKey("isFinished")
                    _finished = newValue
                    didChangeValueForKey("isFinished")
                }
            }
        }

        override var asynchronous: Bool {
            return true
        }

        // MARK: - Lifecycle

        override func start() {
            if cancelled {
                finish() //you may want to trigger the completion closures, but it's a project-specific detail, so I will just show the most straightforward approach here

                return
            } else {
                executing = true
                finished = false
            }
        }

        private func finish() {
            executing = false
            finished = true
        }

        // MARK: - Coalesce

        func coalesce(operation: CoalescibleOperation) {
            // Completion coalescing
            let initialCompletionClosure = self.completion
            let additionalCompletionClosure = operation.completion

            self.completion = {(successful) in
                if let initialCompletionClosure = initialCompletionClosure {
                    initialCompletionClosure(successful: successful)
                }

                if let additionalCompletionClosure = additionalCompletionClosure {
                    additionalCompletionClosure(successful: successful)
                }
            }
        }

        // MARK: - Completion

        func didComplete() {
            finish()

            callBackQueue.addOperationWithBlock {
                if let completion = self.completion {
                    completion(successful: true)
                }
            }
        }
    }

CoalescibleOperation is where we perform the actual coalescing. It works by taking the completion closure of the operation to be coalesced and combining it with the existing completion closure of the operation that is already in the queue. So, let's break it down into smaller code snippets:

    override init() {
        self.callBackQueue = NSOperationQueue.currentQueue()!

        super.init()
    }

Here we store the queue that the operation was created on so that any callbacks are executed on that same queue.
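One thing to be aware of is that the current-queue lookup returns an optional, and Apple's documentation notes that it typically returns nil when called from outside the context of a running operation, in which case the forced unwrap above would crash. A hedged alternative, sketched here in current Swift syntax (where NSOperationQueue is spelled OperationQueue), is to fall back to the main queue. The helper name `resolveCallbackQueue` is hypothetical, and choosing the main queue as the fallback is an assumption about what a given project would want.

```swift
import Foundation

// Picks the queue that completion closures should run on.
// `current` is a parameter so that the nil case is easy to exercise;
// by default it is whatever queue the caller is running on, if any.
func resolveCallbackQueue(current: OperationQueue? = OperationQueue.current) -> OperationQueue {
    // Assumption: callers without a known queue are happy to be
    // called back on the main queue.
    return current ?? OperationQueue.main
}

let background = OperationQueue()

print(resolveCallbackQueue(current: background) === background)
print(resolveCallbackQueue(current: nil) === OperationQueue.main)
```

With a helper like this, the initialiser could assign its result to callBackQueue instead of force-unwrapping.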

    private var _executing: Bool = false
    override var executing: Bool {
        get {
            return _executing
        }
        set {
            if _executing != newValue {
                willChangeValueForKey("isExecuting")
                _executing = newValue
                didChangeValueForKey("isExecuting")
            }
        }
    }

    private var _finished: Bool = false
    override var finished: Bool {
        get {
            return _finished
        }
        set {
            if _finished != newValue {
                willChangeValueForKey("isFinished")
                _finished = newValue
                didChangeValueForKey("isFinished")
            }
        }
    }

    override var asynchronous: Bool {
        return true
    }

    // MARK: - Lifecycle

    override func start() {
        if cancelled {
            finish() //you may want to trigger the completion closures, but it's a project-specific detail, so I will just show the most straightforward approach here

            return
        } else {
            executing = true
            finished = false
        }
    }

    private func finish() {
        executing = false
        finished = true
    }

As the premise of coalescing operations is to better support networking calls, we need to ensure that our operation stays alive while we are waiting for our networking response to be returned. See this earlier post for more details on creating an asynchronous NSOperation subclass.

    func coalesce(operation: CoalescibleOperation) {
        // Completion coalescing
        let initialCompletionClosure = self.completion
        let additionalCompletionClosure = operation.completion

        self.completion = {(successful) in
            if let initialCompletionClosure = initialCompletionClosure {
                initialCompletionClosure(successful: successful)
            }

            if let additionalCompletionClosure = additionalCompletionClosure {
                additionalCompletionClosure(successful: successful)
            }
        }
    }

Here we are coalescing our operations together. First, we create local variables holding the current operation's completion closure and the to-be-coalesced operation's completion closure (initialCompletionClosure contains the closures of any previously coalesced operations). We do so because we are going to replace the current operation's completion closure with a new closure. This new closure contains calls to both older closures. Inside the closure, we check that each closure actually exists (these closures, after all, are optional) and if they exist, we trigger them. When this closure is triggered, any nested closures (those held in initialCompletionClosure) will be executed, ensuring that we can coalesce more than two operations. What's important to note is that this method also handles situations where one operation hasn't provided a completion closure and the other has.
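The nesting described above can be seen in isolation with a few lines of plain Swift. This is a minimal sketch in current Swift syntax, not the post's own code: `Completion` and `chain` are hypothetical names, and an array is used to record the order in which the callbacks fire.

```swift
typealias Completion = (Bool) -> Void

// Returns a closure that calls `first` and then `second`,
// skipping whichever of the two is nil.
func chain(_ first: Completion?, _ second: Completion?) -> Completion {
    return { successful in
        first?(successful)
        second?(successful)
    }
}

var fired: [String] = []

// Start with one caller, then coalesce three more requests,
// one of which did not provide a callback.
var completion: Completion? = { _ in fired.append("A") }
completion = chain(completion, { _ in fired.append("B") })
completion = chain(completion, nil)
completion = chain(completion, { _ in fired.append("C") })

// A single call unwinds every nested closure in the order they were added.
completion?(true)
print(fired)
```

Each call to `chain` wraps the previous closure, so the final closure holds the whole history of callbacks, and the request without a callback is skipped.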

    func didComplete() {
        finish()

        callBackQueue.addOperationWithBlock {
            if let completion = self.completion {
                completion(successful: true)
            }
        }
    }

This is a convenience method that triggers the completion of the operation and ensures that all closures are called on the correct queue/thread. As the operation is asynchronous, we need to move it into the finished state ourselves when its task is complete. Calling finish will do this and ensure that the operation is removed from the queue.

Bringing it to an end

With this approach, we can ensure that we only execute unique operations within the context of the queue while still supporting parallel processing overall. In the above example, we only support one queue and one type of closure, but the QueueManager can easily be extended by adding more properties with the same pattern as shown.

However, nothing comes free of charge. We can see in the above that scheduling an operation requires more setup code than a non-coalesced operation, and where there is code, there is always the chance for bugs 🐜.

To see the above code snippets together in a working example, head over to the repository and clone the project.